The resources for transformative technologies such as artificial intelligence often lie with a few large technology companies such as Google, Meta or Microsoft. This concentration can hinder the general progress of research and create problems for resource use and environmental protection, as well as raising ethical and social concerns. Machine learning applications in particular, which are used worldwide to translate, classify and analyse texts, can reproduce implicit biases contained in the data on which they are trained.

In technical jargon, such programmes are called Large Language Models (LLMs). These are algorithms that learn statistical associations between billions of words and sentences in order to create summaries and translations, answer questions about content or classify texts. To do this, they use an architecture inspired by the neural networks of the human brain. The algorithms are trained by repeatedly adjusting their internal values, known as parameters: individual words in the training text are hidden, the model predicts them, and its predictions are compared with the words that were actually there.
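To make this hide-predict-compare loop more concrete, here is a deliberately tiny Python sketch. It is a toy, not how BLOOM or any real LLM is implemented: the corpus is hypothetical, the "model" is just a table of scores (its parameters) saying which word tends to follow which, and each training step hides one word, predicts it from the word before it, and nudges the scores by the gradient of the cross-entropy between the prediction and the real word. Real LLMs use deep neural networks with billions of parameters, but the cycle of predicting, comparing with reality and adjusting parameters is the same in spirit.

```python
import math
import random

# Hypothetical toy corpus, already split into words.
CORPUS = "the cat sat on the mat the dog sat on the rug".split()
VOCAB = sorted(set(CORPUS))
INDEX = {word: i for i, word in enumerate(VOCAB)}
V = len(VOCAB)

# The model's parameters: one score for every (previous word, hidden word) pair.
params = [[0.0] * V for _ in range(V)]


def predict(prev):
    """Return a probability for each vocabulary word filling the gap after `prev`."""
    scores = params[INDEX[prev]]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=200, lr=0.5, seed=0):
    """Hide one word per step, predict it, and adjust the parameters."""
    rng = random.Random(seed)
    for _ in range(steps):
        pos = rng.randrange(1, len(CORPUS))  # skip position 0: it has no left neighbour
        prev, hidden = CORPUS[pos - 1], CORPUS[pos]
        probs = predict(prev)
        row = params[INDEX[prev]]  # the scores used for this prediction
        for j in range(V):
            # Gradient of cross-entropy w.r.t. each score: prediction minus reality.
            target = 1.0 if j == INDEX[hidden] else 0.0
            row[j] -= lr * (probs[j] - target)


train()
probs = predict("sat")
best = max(range(V), key=lambda j: probs[j])
print(VOCAB[best], round(probs[best], 2))
```

Because "sat" is always followed by "on" in the toy corpus, training shifts most of the probability after "sat" onto "on". The model has learned a statistical association, not the meaning of either word.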

Large technology companies use LLMs for everyday applications such as chatbots and translators. But they also have a great influence on the content with which the AI is trained. As a result, the models can, for example, mimic prejudices that resonate in texts written by humans, such as racist and sexist associations. They can even encourage abuse and self-harm. Moreover, the technologies do not understand the underlying meaning of human language, which can lead AI to produce incoherent texts; the models are, after all, only as good as the datasets on which they are based. In addition, developing these AI-based programmes usually comes at a high financial and environmental cost, because the enormous computing power required for training also leaves a sizeable ecological footprint.

How Can AI Language Models Become More Accessible?

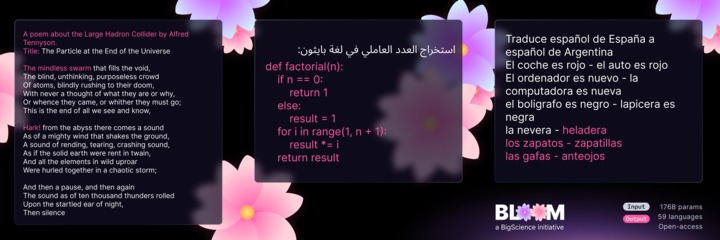

BLOOM, a new open-source AI for language processing and text generation, was developed in one year by about 1,000 researchers from almost 60 countries and more than 250 institutions. The comprehensive LLM is multilingual and was specifically designed to be transparent, usable by everyone and free from the strong influence of “big tech”, in order to help reduce implicit biases in AI. The aim of the project is to better understand AI, to make it more open and thereby to steer machine learning in a more responsible direction.

The alliance of scientists and institutions is called BigScience, and it trained BLOOM using publicly funded computing time worth 7 million USD. The researchers hand-picked almost two thirds of the 341-billion-word training dataset themselves, drawing on 500 sources. The result is an LLM with 176 billion parameters that can generate text in 46 natural and 13 programming languages. The AI is intended for use in various research projects, for example to extract information from historical texts or to carry out classification tasks in biology.

The researchers are mostly academic volunteers, including ethicists, legal scholars and philosophers, but also employees of Google and Facebook who are supporting the project independently. The languages for training the AI were selected in close consultation with native speakers and country experts. Likewise, the entire development process drew on multiple perspectives and aimed to take into account possible prejudices, social impacts, limitations and restrictions of AI, as well as potential improvements.

CO2 emissions, which are usually very high for AI, were also discussed particularly intensively in expert circles. BigScience has not yet published a figure for BLOOM's emissions, but plans to do so.

Innovative Approach – More Diverse Outputs

Ultimately, a fundamental goal in developing BLOOM was to take a wider and more diverse range of characteristics into account during training: How pronounced are stereotypical associations? How strongly is the LLM biased towards certain languages? The researchers hope this will give the AI a deeper understanding of language and ultimately lead to less harmful outputs from AI systems.
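One simple way to put a number on a stereotypical association in text data is to count how often a target word appears alongside contrasting attribute words. The Python sketch below is only an illustration under stated assumptions: the sentences are hypothetical, the measure (a plain sentence-level co-occurrence share) is chosen for simplicity, and it is not the evaluation method BigScience used.

```python
from collections import Counter


def cooccurrence(sentences, targets, attributes):
    """Count how often each target word shares a sentence with each attribute word."""
    counts = Counter()
    for sentence in sentences:
        words = set(sentence.lower().split())
        for t in targets:
            if t in words:
                for a in attributes:
                    if a in words:
                        counts[(t, a)] += 1
    return counts


def skew(counts, target, a, b):
    """Share of the target's co-occurrences that involve `a` rather than `b` (0.5 = balanced)."""
    n_a, n_b = counts[(target, a)], counts[(target, b)]
    total = n_a + n_b
    return n_a / total if total else 0.5


# Hypothetical sentences, made up for illustration only.
corpus = [
    "the nurse said she would help",
    "the nurse said she was tired",
    "the engineer said he fixed it",
    "the engineer said she fixed it",
    "the nurse said he was late",
]
counts = cooccurrence(corpus, ["nurse", "engineer"], ["he", "she"])
print(skew(counts, "nurse", "she", "he"))
print(skew(counts, "engineer", "she", "he"))
```

In this made-up corpus, "nurse" appears with "she" in two of its three pronoun co-occurrences (a skew of about 0.67), while "engineer" is balanced at 0.5. A model trained on such text would tend to pick up the first link as a statistical pattern.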

In an interview with The Next Web, Thomas Wolf, co-founder of Hugging Face, the startup spearheading BigScience, explained: “Big ML [machine learning] models have changed the world of AI research in the last two years, but the huge computational costs required to train them have meant that very few teams actually have the ability to train and research them.” This is also why the new system is meant to be usable by anyone who agrees to the terms of the Responsible AI License, which was developed as part of the project itself. However, downloading and running the AI requires powerful hardware, so the team is also working on a smaller, less hardware-intensive version.
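A back-of-the-envelope calculation shows why running the full model needs so much hardware. The Python sketch below multiplies the 176 billion parameters by the number of bytes each parameter occupies. It assumes that only the weights are counted (no activations, caches or framework overhead), and both the list of numeric formats and the 80 GB accelerator size are illustrative assumptions rather than details from the project.

```python
import math

PARAMS = 176_000_000_000  # parameter count of BLOOM, as stated in the article

# Bytes needed to store one parameter in common numeric formats (assumed set).
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(params, dtype):
    """Memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def devices_needed(memory_gb, device_gb=80):
    """Minimum number of accelerators with `device_gb` each that can hold the weights."""
    return math.ceil(memory_gb / device_gb)


for dtype in BYTES_PER_PARAM:
    gb = weight_memory_gb(PARAMS, dtype)
    print(f"{dtype}: {gb:.0f} GB of weights, at least {devices_needed(gb)} x 80 GB devices")
```

This prints 704, 352 and 176 GB for the three formats. Even at 16 bits per parameter, the weights alone (352 GB) far exceed the memory of any single consumer graphics card, which is why a smaller version is attractive.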

The scientists want to continue experimenting with the basic concept and are optimistic that its capabilities can be improved further, for example by adding more languages. Similar projects have already successfully applied this kind of transparent approach to digital technologies for climate protection and supply chains. BLOOM can therefore serve as a starting point for future, more complex structures and systems that also promote greater transparency and openness in machine learning.