Hardly any other technology is currently as controversial and as widely discussed as artificial intelligence (AI). Intelligent algorithms and machine learning are already being used in many areas, and development is still in full swing. Expectations are high that AI will drive product innovation and open up new markets and research prospects through speech and image recognition, forecasting and complex modelling. In our German-language publication Greenbook (1): Artificial Intelligence – Can we save our planet with computing power?, we showed how AI-based systems are used in environmental and climate protection and what potential they have to advance and improve the energy transition, the circular economy, and nature and species conservation.

At the same time, critics are increasingly pointing out the ecological and ethical problems that AI processes entail, and options for regulation are being discussed at the political level.

The SustAIn project aims to develop criteria for assessing the sustainability of artificial intelligence applications and to examine their sustainability effects using selected examples. The joint project is funded under the AI Lighthouses initiative of the German Federal Ministry for the Environment (BMU). It is carried out by the Institute for Ecological Economy Research (IÖW) together with AlgorithmWatch and the Distributed Artificial Intelligence Laboratory (DAI Laboratory) at the Technical University of Berlin. Friederike Rohde coordinates the IÖW's sub-project.

Friederike, how did the idea to develop a sustainability index for AI come about?

We started in September 2019 with the Bits & Trees Forum, a series of talks designed to bring the discussion about sustainable digitalisation more firmly into political discourse. At the kick-off event, we talked about AI and sustainable development and noticed that there is still a great need to connect these topics. This concerns not only the social impact but also the ecological impact of AI-based processes. So when the call for proposals for the AI Lighthouses initiative came out, we teamed up with AlgorithmWatch and the DAI Lab and developed the idea for the Sustainability Index for Artificial Intelligence.

What is the goal of SustAIn?

Our goal is to influence the social discourse on AI and the design of these systems. We also want to show what the development, implementation and use of automated decision-making mean for society and the planet. There is currently a lack of systematic ways to evaluate this. Above all, there is a lack of the data that such an evaluation would require in the first place.

We work in a highly interdisciplinary way. Our core team of eight people comes from computer science, software development, sociology, economics, political science, and media and sustainability studies. Together we want to develop a set of criteria for the sustainable design of AI-based systems and examine them qualitatively and quantitatively in three case studies. We aim to publish the results in annual reports.

Why should AI applications be regulated?

There has long been a discussion about the ethical aspects of artificial intelligence and the impact of AI processes on social stigmatisation, discrimination and gender stereotypes. The main issue here is to ensure that AI systems do not reproduce the social prejudices that are, after all, reflected in the data sets.

I also think it is important to consider how the data is actually collected and what it is supposed to represent. We can't simply say that data reflects reality, because it is often already an interpretation of reality. If algorithms then make far-reaching decisions based on this data, such as selecting job applicants or approving loans, that is of course highly problematic. The social consequences of using these automated decision-making systems can be very far-reaching, and we are only at the beginning of the debate.

In February, the European Commission presented a legal framework for AI. It already contains many important aspects, such as high-quality data sets, traceability, robustness and accuracy. As is so often the case with such principles, the devil is in the detail of implementation. The question is what exactly the many actors and organisations that develop and use AI systems must do to comply with them.

What are the environmental and social challenges of intelligent algorithms?

In my view, one important aspect of the social and ecological challenges of AI systems is the relationship between effort and benefit. One example is the energy consumption of very large deep learning models. Quite high figures published by American researchers have been circulating here. But these figures are often misrepresented or misinterpreted, because the highest values refer only to neural architecture search (NAS), an automated and very compute-intensive search for a suitable network design, carried out for certain existing models such as Transformer or BERT. The numbers are then often cited to support an argument, although they are not representative. It's like comparing the fuel consumption of a Formula One racing car with that of an ordinary car. According to the study, training a sophisticated model like BERT causes emissions of about 0.65 tonnes of CO2. Training a widely used model like Transformer, by contrast, causes about 12 kilograms.

The energy and resource consumption of the models is of course an important issue. But we also have to ask where all the hardware that runs these computationally intensive processes actually comes from, and under what conditions it is produced. In this respect, we are still very far from sustainability, and such indirect effects are often overlooked.

It is also still unclear how the ratio between training and inference (i.e. running the trained model to make decisions) affects energy consumption. It is currently assumed that machine learning inference, meaning execution in the respective field of application, accounts for 90 percent of infrastructure costs. Training accounts for only about 10 percent. However, it is unclear whether this split translates directly into energy consumption.

On the social side, there are of course the very far-reaching consequences I have already mentioned. The main question here is what impact automated decision-making processes have on social integrity. It is also a question of what role we as a society want to give to the data-driven control of decisions and processes.

How will you arrive at the sustainability criteria?

We draw on existing debates in both research and practice. As a first step, we tried to distil some criteria from the current state of discussion and research. So far, we have developed an initial set of 16 criteria, which are still being supplemented and refined. The difficulty lies in making these criteria assessable through concrete indicators and measures. We are currently thinking about how to do this meaningfully and what kind of evaluation grid to use. At the moment, we are using a tiered model, but that could change.

Once the sustainability criteria are complete, we want to develop an evaluation system and conduct case studies in the areas of energy, mobility and online shopping. In each area, we want to take a closer look at how the positive contributions to socio-ecological transformation weigh against the risks for people and the environment.

In our "Sustainable AI Labs", we try to work as closely as possible with practitioners and experts in the field. Our first lab has already given us initial feedback on our approach, and we want to continue this exchange. After all, we want to develop something that others can build on and that makes a meaningful contribution to more sustainable AI systems. To this end, we also want to draw up recommendations for developers and identify entry points for policymakers, so that sustainability goals receive greater consideration.

In which sustainability areas do you see particularly high potential for AI and how can we make the best use of it?

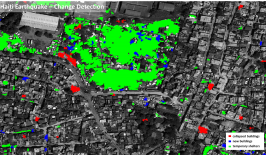

In the energy sector, for example, forecasting or intelligent building control may help save energy. There is also great potential in earth system monitoring. Nevertheless, we must bear in mind that a socio-ecological transformation ultimately always requires processes of social change. It is about new ways of thinking and new practices, and often about challenging prevailing structures. Of course, there are limits to what AI can do. We must also ensure that it is used for the common good.

When can we expect the first results of your project?

We plan to publish the first "Sustainable AI Report" in spring 2022, likely in both English and German. We hope this will be an important contribution to evaluating the sustainability of AI systems. In any case, the project shows how important an interdisciplinary perspective is. It also shows the value of exchange on this topic between science, civil society and practice, and that there is still a great need for discussion and research.

This is a translation of an original article which appeared on the German RESET website.