Since the launch of the chatbot ChatGPT in November 2022, AI systems have become almost impossible to avoid, both in the media and in everyday life. The new programmes, which seem to respond intelligently and in a human-like way to questions and inputs, could change the job market as well as the world at large, a prospect that is striking fear into the hearts of several high-profile scientists. We also put ChatGPT to the test, asking it complex climate questions. Its answers raised at least a few question marks.

Amidst the hype about the new language models, it is quite astonishing how few people question the energy consumption of ChatGPT and its competitors. According to studies, the training of new language models alone requires huge amounts of energy and accordingly releases plenty of emissions.

How do language models work in the first place?

To understand the extent to which chatbots like ChatGPT impact the environment, we first need to understand how they are developed and how they work. The rough distinction between a language model and a chatbot can be illustrated with ChatGPT: the chatbot is the application to which we, as users, can put any question we like.

Its answers are based on the language model GPT-3.5, which was trained on data up to September 2021. The data set consisted of books, Wikipedia entries, poems and a vast array of other text content. The language model had to process this content through machine learning in order to be able to respond to queries today.

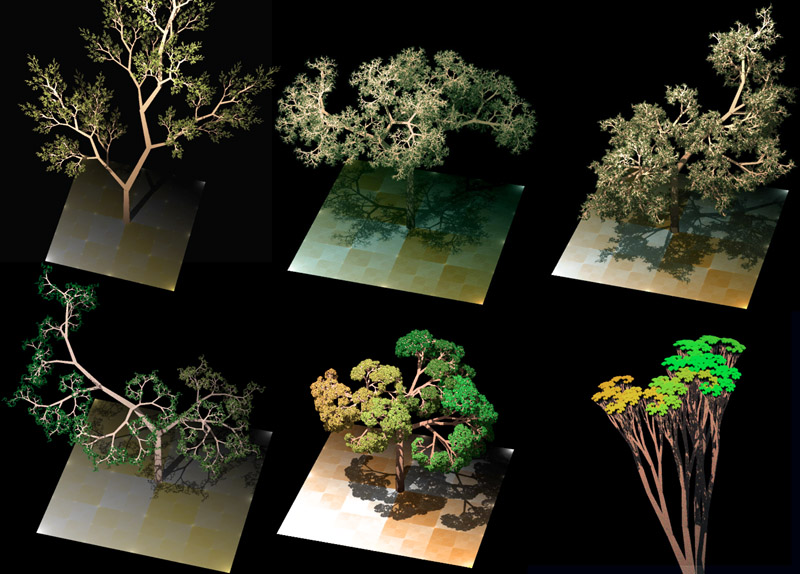

In this training, a language model first learns to find patterns as well as differences in the pieces of text. This is followed by guided training.

How to train a language model

GPT-3, the model family on which ChatGPT is based, has 175 billion parameters. For its successor, GPT-4, a figure of 100 trillion parameters has been widely reported, although OpenAI has not confirmed it. These parameters are the adjustable values that are tuned during the training of so-called transformers.

How exactly transformers are able to understand text content is less important here. What is relevant, however, is that their training requires a lot of computing power over a long period of time. Companies like OpenAI use supercomputers for this. According to an article from February 2023, ChatGPT was trained on a total of 10,000 graphics cards. As in conventional computers, these graphics cards have to be embedded in systems with processors, main memory and other components. Quite early on, OpenAI received support from Microsoft, which provided the company with the necessary computing power via its Azure cloud.

Supercomputers whose graphics processors could be linked via extremely high transmission bandwidths were specially developed for this purpose. According to Microsoft’s Senior Director Phil Waymouth, training ChatGPT required “exponentially larger clusters of networked GPUs than anyone in the industry had ever attempted to build.”

Despite this enormous computing power, experts estimate that OpenAI needed several months to train GPT-4. OpenAI keeps the exact details of how its language model was trained, and of the resources this required, a secret. However, a study from 2019 indicates that the training of a large language model releases approximately as many greenhouse gases as the production and operation of five combustion cars over their entire lifetime.

And all of this computational effort takes place before users can put their first questions to a new AI chatbot.

Queries significantly more computationally intensive than a Google search

After training, a language model such as OpenAI’s GPT-3 must be embedded in an application. In the case of the AI chatbot ChatGPT, which millions of people have now tried out, this is done via a text-based chat application. The software giant Microsoft, which invested further in OpenAI at the beginning of 2023, is now even integrating the language model into its own search engine, Bing.

Although language models could also be operated on users' own hardware, most users choose the path via web services. In the case of ChatGPT, OpenAI provides its chatbot free of charge, hosted on its own servers and via Microsoft’s Azure cloud.

If we type a request into the input field of ChatGPT in Germany, it is transmitted to the server farms in the global Azure cloud network and processed there. And although Microsoft advertises that “the Microsoft Cloud is between 22 and 93 percent more energy efficient than traditional enterprise data centres, depending on the specific comparison”, the energy consumption per query is estimated to be many times higher than that of a search query on Google.

The comparison shows quite clearly how much higher the computing effort of AI chatbots currently is compared to search engines. In addition, GPT does not even generate its results from real-time data, but from an outdated data set. So, for now at least, we get worse results that require significantly more computing effort, even if they are presented in a more engaging way.

How can large language models become more energy efficient?

Even if the current AI boom levels off, it is very likely that companies will integrate language models or other AI applications into more and more programmes, computer systems and web applications. In the future, we may no longer even be able to tell when the information provided is pre-programmed and when it comes from a language model.

This means that there is an urgent need for solutions that are more energy-efficient both in training and in use, especially since future systems will have to access real-time information from the internet in addition to their training data in order to avoid constantly providing outdated information.

Researchers at the University of California, Berkeley, together with Google employees, have presented best practices that could reduce the amount of energy used in ML training by a factor of up to 100. Carbon dioxide emissions could even be reduced by a factor of up to 1,000.
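The relationship between the two factors can be illustrated with a short calculation. The following is only a rough sketch: it assumes that emissions are simply energy use multiplied by the carbon intensity of the electricity, and the baseline values are invented for illustration and not taken from the study. Only the factors of 100 and 1,000 come from the text above.

```python
def emissions_kg(energy_kwh, intensity_kg_per_kwh):
    """CO2 emissions as energy use times the carbon intensity of the electricity."""
    return energy_kwh * intensity_kg_per_kwh


# Hypothetical baseline, chosen only to make the arithmetic visible.
baseline_energy_kwh = 1_000_000.0
baseline_intensity = 0.5  # kg CO2 per kWh, made-up value

# Factors quoted in the article.
energy_factor = 100
emissions_factor = 1000

# If energy falls by 100x but emissions fall by 1,000x, the electricity
# itself would have to be this many times cleaner (under the assumption above).
implied_intensity_factor = emissions_factor / energy_factor

baseline = emissions_kg(baseline_energy_kwh, baseline_intensity)
optimised = emissions_kg(
    baseline_energy_kwh / energy_factor,
    baseline_intensity / implied_intensity_factor,
)

print(f"implied reduction in carbon intensity: {implied_intensity_factor:.0f}x")
print(f"overall reduction in emissions: {baseline / optimised:.0f}x")
```

Under this simplified model, the additional factor of ten would have to come from where and with which electricity the training takes place, not from the model or the hardware alone.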

Google’s own language model, GLaM, was the most powerful language model trained in 2021. Although it was developed only one year after GPT-3, its training released 14 times less carbon dioxide despite its higher performance. From this, the researchers conclude that the energy hunger of new AI systems will first level off over the next few years and then decline.

However, efficient training does not yet mean that the hunger for energy during use is also reduced. Researchers are working on several approaches inspired by a particularly efficient neural network: the human brain.

To this end, GLaM is divided into 64 smaller neural networks. This means that the language model does not have to activate its entire network to answer a query; instead, it can leave a large part of its sub-networks inactive. In tests, GLaM used only eight percent of its more than one trillion parameters per query. Accordingly, it required significantly less computing effort to respond to queries with sufficient quality.
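The idea of leaving most sub-networks inactive can be sketched in a few lines of Python. This is a toy illustration, not Google's implementation: the "experts" are simple functions rather than neural networks, the gating scores are random, and the choice of two active experts per input (`TOP_K`) is an assumption made for the example. Only the number of 64 sub-networks is taken from the text above.

```python
import math
import random

NUM_EXPERTS = 64  # from the article: GLaM is divided into 64 smaller networks
TOP_K = 2         # assumption for illustration: experts activated per input


def softmax(scores):
    """Turn raw gating scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_scores, top_k=TOP_K):
    """Select the top_k highest-scoring experts and renormalise their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    weight_sum = sum(probs[i] for i in chosen)
    return {i: probs[i] / weight_sum for i in chosen}


def moe_forward(x, experts, gate_scores):
    """Run only the selected experts and blend their outputs by weight."""
    routing = route(gate_scores)
    return sum(weight * experts[i](x) for i, weight in routing.items())


# Toy experts: each "network" just scales its input by a different constant.
experts = [lambda x, k=k: x * (k + 1) for k in range(NUM_EXPERTS)]

# Random gating scores stand in for a learned gating network.
rng = random.Random(0)
gate_scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

routing = route(gate_scores)
output = moe_forward(1.0, experts, gate_scores)
active_share = len(routing) / NUM_EXPERTS
print(f"active experts: {sorted(routing)} ({active_share:.1%} of all experts)")
```

All other experts are never called for this input, which is where the savings in computing effort come from. The share of active experts in this sketch is not meant to reproduce the eight percent figure reported for GLaM.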

Conscious use of AI systems is becoming increasingly important

Future AI systems will, we can assume, work much more efficiently than ChatGPT and GLaM do today. However, this will not make the conscious use of such systems any less relevant. On the one hand, users should consider whether the information they need actually has to be generated by a language model or whether their own research would suffice.

On the other hand, it must remain transparent in the future when programmes process search queries or tasks offline and when they process them online via language models or other AI systems. At present, however, the trend is moving in a different direction. A corresponding political framework is therefore indispensable in order to keep the energy consumption of current and future AI applications as low as possible, starting with training.