To most people, the name Nvidia probably does not mean much, but ask a video gamer and you’ll likely get a far more enthusiastic response. Since 1993, Nvidia has been at the forefront of the video game hardware market and has developed a range of high-end graphics cards for gaming PCs and consoles.

Generally speaking, Nvidia has more in common with Call of Duty than climate research, but the US-based corporation is now looking to apply its expertise in simulation and processing power to helping the environment.

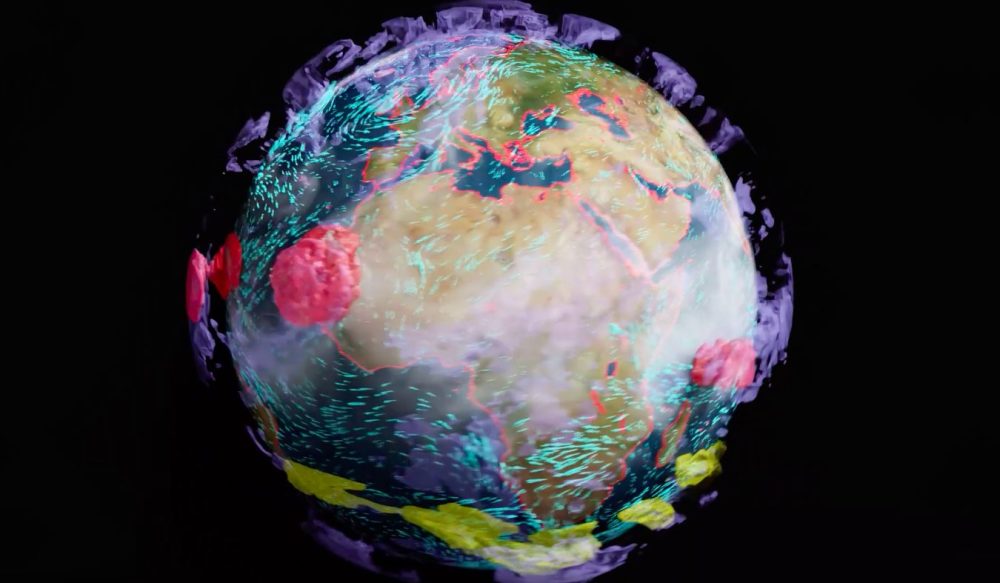

Nvidia recently announced Earth-2, a new supercomputer which they claim can realistically model the Earth to a metre scale. The project is still very much in the development stage, but already it is being suggested such computer simulations could greatly assist climate research and environmental policy.

Computer simulations of the Earth are not entirely new; the Earth Simulator series, for example, has been developed in Japan from 1999 onwards. But currently, most climate simulations have a resolution of around 10 to 100 kilometres. Although this is useful for wide-ranging weather patterns and systems, it does not necessarily address the full spectrum of our environmental woes.

By zooming in to a metre-scale resolution, Earth-2 could theoretically give a more accurate simulation of elements such as the global water cycle and the changes which lead to intensifying storms and droughts. With metre-scale resolutions, towns, cities and nations would also be able to receive early warnings about climate events, and plan infrastructure improvements or emergency responses on a street-by-street, or even building-by-building, basis. Eventually, such simulations could plug into decades-old climate data and provide a broad ‘digital twin’ of the Earth for researchers to experiment with, for example to examine how clouds reflect sunlight.
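To give a feel for what the jump in resolution means, the following is a rough back-of-the-envelope sketch, not a description of how Earth-2 or any real climate model lays out its grid. It assumes the Earth's surface (about 510 million square kilometres) is tiled with flat square cells, and it ignores vertical layers and time steps entirely. The function name `surface_cells` is purely illustrative.

```python
EARTH_SURFACE_KM2 = 510e6  # approximate total surface area of the Earth

def surface_cells(resolution_m):
    """Number of square cells of side `resolution_m` needed to tile the surface."""
    cell_area_km2 = (resolution_m / 1000.0) ** 2
    return EARTH_SURFACE_KM2 / cell_area_km2

# Compare today's typical resolutions (100 km and 10 km) with a 1 m grid.
for res_m in (100_000, 10_000, 1):
    print(f"{res_m:>7} m grid: {surface_cells(res_m):.1e} cells")
```

Under these simplified assumptions, moving from a 10-kilometre grid to a 1-metre grid multiplies the number of surface cells by around a hundred million, before any extra vertical detail or shorter time steps are taken into account.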

Reaching this new resolution would require machines with millions to billions of times more computing power than is currently available. And with processing power generally increasing ten times every five years, that would take decades to achieve. However, Nvidia claims this giant leap is possible if several different technologies and disciplines are combined. By bringing together GPU-accelerated computing (Nvidia’s core competence) with deep learning artificial intelligence and physics-informed neural networks, vast amounts of information can be run through giant room-sized supercomputers.
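The growth arithmetic can be checked with a short sketch. It assumes the tenfold-every-five-years rate quoted above holds steadily, which is a simplification, and the function name `years_to_reach` is illustrative rather than taken from any source.

```python
import math

def years_to_reach(speedup, growth_factor=10.0, period_years=5.0):
    """Years needed to gain `speedup` if compute grows by
    `growth_factor` every `period_years` (assumed constant rate)."""
    periods = math.log10(speedup) / math.log10(growth_factor)
    return periods * period_years

for label, speedup in [("a million times", 1e6), ("a billion times", 1e9)]:
    print(f"{label}: about {years_to_reach(speedup):.0f} years")
```

At that assumed rate, a millionfold gain works out to about 30 years and a billionfold gain to about 45 years of ordinary hardware progress.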

The plan is for Earth-2 to then be plugged into Nvidia’s Omniverse platform (not to be confused with the ‘metaverse’), an open platform developed for virtual collaboration and real-time, physically accurate simulations. Although largely geared towards Nvidia’s main market – video games and media – such powerful simulations can also play important roles in scientific research, architecture, engineering and manufacturing.

Nvidia already has some experience in this field. Earlier this year, they unveiled Cambridge-1, a 100 million USD supercomputer constructed in the UK. Built in only 20 weeks – as opposed to the two years usually needed for supercomputers – Cambridge-1 aims to provide a collaborative simulation platform for healthcare research, universities and hospitals.

Rise of the Supercomputers

At their core, supercomputers are computers with an extremely high level of performance compared to a general desktop computer. Whereas most consumer computers deal in ‘millions of instructions per second (MIPS)’, supercomputers are graded by ‘floating-point operations per second (FLOPS)’. To put it simply, supercomputers are hundreds of thousands of times faster and more powerful than a generic computer, with the most powerful supercomputers being able to perform up to 27,000 trillion calculations per second.

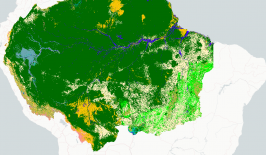

This is the kind of power you need if you want to run increasingly complex simulations, such as modelling the physics of space, or to engage in quantum mechanics or fission research. As the technology develops, these simulations can be run at increasingly high resolutions and large scales. In the 1960s, climate modelling was only possible with regard to the oceans and land vegetation. Now, models have been developed for ice sheet movement, atmospheric chemistry, marine ecosystems, biochemical cycles and aerosol dispersal, among others.

For example, the Plymouth Marine Laboratory makes use of the Massive GPU Cluster for Earth Observation (MAGEO) supercomputer, which allows it to predict wildfires, detect oil spills and plastics, and map mangroves and biodiversity. Today, there are around 500 supercomputers of varying power across the globe.

But supercomputers are not just ‘super’ in terms of processing power; they’re also super-sized. Usually, supercomputers consist of large banks of processors and memory modules networked together with high-speed connections. The largest supercomputers also utilise parallel processing, which allows a computer to tackle multiple parts of a problem at once. This means supercomputers are usually the size of entire rooms.
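The idea of tackling multiple parts of a problem at once can be illustrated with a toy sketch. This is only the split, compute and combine pattern in miniature: the function names are made up for the example, and Python threads do not actually speed up this kind of number crunching. Real supercomputers spread such work across thousands of processors, typically with dedicated frameworks such as MPI.

```python
from concurrent.futures import ThreadPoolExecutor

def sum_of_squares(chunk):
    """Work on one independent piece of the problem."""
    return sum(x * x for x in chunk)

def parallel_sum_of_squares(values, workers=4):
    """Split `values` into chunks, process them concurrently, then combine."""
    size = max(1, len(values) // workers)
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)

values = list(range(1_000))
# The combined result should match the straightforward serial calculation.
print(parallel_sum_of_squares(values) == sum(x * x for x in values))
```

The important point is that each chunk can be processed without waiting for the others, so adding more workers lets a larger problem be handled in roughly the same time.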

But all this processing power also comes with massive energy demands and huge quantities of waste heat. This means supercomputers themselves are not necessarily the most environmentally friendly research tools. For example, Japan’s Fugaku supercomputer uses more than 28 megawatts of power to perform its function. That’s equivalent to the power used by tens of thousands of homes in the United States. Australian astronomers calculated that supercomputer use was their single biggest source of carbon emissions, three times that of flying. Of course, the amount of carbon generated will depend on how power is generated within a nation in the first place, with greener nations’ supercomputers generally producing less carbon.
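The household comparison can be sanity-checked with a quick calculation. The sketch below assumes an average US household uses about 10,600 kilowatt-hours of electricity per year; that figure is an assumption in line with commonly published US averages and does not come from this article. The function name `homes_equivalent` is illustrative.

```python
HOURS_PER_YEAR = 24 * 365

def homes_equivalent(power_mw, household_kwh_per_year=10_600):
    """Number of average households drawing the same continuous power.

    `household_kwh_per_year` is an assumed average, not a sourced figure.
    """
    household_kw = household_kwh_per_year / HOURS_PER_YEAR
    return power_mw * 1000 / household_kw

print(f"{homes_equivalent(28):,.0f} homes")
```

With that assumed household figure, 28 megawatts of continuous draw comes out at a little over 20,000 homes, consistent with the ‘tens of thousands’ estimate.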

However, steps are being taken to improve their energy efficiency. For example, more efficient code means less processing power is needed, reducing energy demands, while building supercomputers in chillier locations, such as Iceland, means their excess heat can be used for other purposes. Furthermore, few are suggesting this energy use is not justified, as the work supercomputers are conducting can be used to help develop environmental protections.

It should also be pointed out that, compared to general computer use, supercomputer energy use is minuscule. As Dan Stanzione, executive director of the Texas Advanced Computing Center at the University of Texas at Austin, explains:

“Supercomputers are also just a tiny fraction of all computing. Large data centres in the United States alone would emit about 60 million tonnes, so all the supercomputers in the world would be less than 5 per cent of the power we use for email and streaming videos of cats.”